I think this is a challenging question, but one that can be thought through in a detailed way. The conclusion I'm going to defend is that a universal grammar that looks anything like what Chomskyans expect will be analytic a priori knowledge -- assuming those terms are indeed well-defined. I'll do my best to select fairly robust definitions of those terms, but keep in mind that anyone who rejects the existence of a priori knowledge, or who rejects the analytic-synthetic distinction, will reject my conclusion as meaningless or ill-formed.

I'll also discuss the lingering possibility that knowledge of a universal grammar might indeed be synthetic a priori knowledge, and what one would have to demonstrate to persuade me of that claim.

Space does not permit a full development of the argument I want to make, so take what I'm offering here only as a rough sketch -- in two parts. I'll begin by talking about aprioricity; then I'll talk about analyticity.

Knowledge of Universal Grammar is A Priori

First, I want to defend the position that if anything is a priori knowledge, then any well-formed universal grammar is a priori knowledge -- regardless of whether it is "innate." The argument is very simple and goes like this:

- If a priori knowledge exists at all, then any knowledge that we can mathematically formalize is a priori knowledge.

- A "well-formed universal grammar" is a syntactic structure that we can mathematically formalize.

The desired conclusion immediately follows. Recall that "a priori" knowledge is not necessarily innate knowledge -- it's simply knowledge that can be verified as true without having to turn to experience. (That might always count as "innate" depending on what your definition of "innate" is; but let's not get into that!)

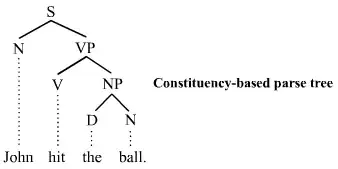

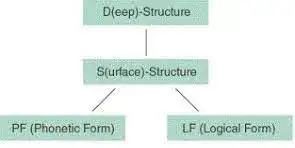

Now, premise one seems indispensable if we're using these terms in ways that are even close to standard. Premise two is defensible because the whole point of Chomskyan grammars is that they can be formalized; for example, transformational grammars can be formalized as tree automata. So if the Chomskyan program is on the right track, then the particular universal grammar inside the heads of all humans is mathematically formalizable, and is therefore a priori knowledge.

Now, what if this grammar isn't really universal? What if different people have different grammars in their heads? I don't think that would change anything. If we have multiple differing grammars inside our heads, they all should still count as a priori knowledge if they are mathematically formalizable. But if there are no mathematically formalizable grammars in our heads, then the Chomskyan program is on the wrong track, and the question stops being coherent. (We would still have a priori knowledge of things like context-free grammars, transformational grammars, pushdown automata, and tree automata! They just wouldn't have any particular relation to the grammars of natural human language.)
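To make "mathematically formalizable" a little more concrete, here is a minimal sketch of a context-free grammar written down as plain data, together with a standard CYK recognizer that decides membership purely by rule application. The toy grammar, its vocabulary, and the names `BINARY_RULES`, `LEXICAL_RULES`, and `recognizes` are my own illustrative assumptions; nothing here is meant as a claim about what an actual universal grammar looks like.

```python
from itertools import product

# Hypothetical toy grammar in Chomsky normal form (an assumption for illustration).
# Binary rules map a pair of right-hand-side nonterminals to the possible left-hand sides.
BINARY_RULES = {
    ("NP", "VP"): {"S"},
    ("Det", "N"): {"NP"},
    ("V", "NP"): {"VP"},
}
# Lexical rules map a word to the nonterminals that can produce it.
LEXICAL_RULES = {
    "the": {"Det"},
    "dog": {"N"},
    "cat": {"N"},
    "sees": {"V"},
}
START = "S"


def recognizes(words):
    """Return True if the toy grammar derives the given list of words (CYK)."""
    n = len(words)
    if n == 0:
        return False
    # table[i][j] holds the nonterminals that derive words[i : i + j + 1].
    table = [[set() for _ in range(n)] for _ in range(n)]
    for i, word in enumerate(words):
        table[i][0] = set(LEXICAL_RULES.get(word, set()))
    for span in range(2, n + 1):
        for start in range(n - span + 1):
            for split in range(1, span):
                left = table[start][split - 1]
                right = table[start + split][span - split - 1]
                for pair in product(left, right):
                    table[start][span - 1] |= BINARY_RULES.get(pair, set())
    return START in table[0][n - 1]
```

Calling `recognizes("the dog sees the cat".split())` checks grammaticality without consulting anything beyond the rules themselves, which is the sense of "formalizable" the argument relies on.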

Knowledge of Universal Grammar is Analytic

The difficult part of this question is whether our knowledge of a well-formed universal grammar would be synthetic or analytic. Here again, we have to accept that the distinction exists; otherwise the question is incoherent. But what might the distinction mean in this case? In particular, we need a precise understanding of the term "analytic." Then we need to understand what it takes for a priori knowledge to be synthetic. This last problem is very difficult, and I think the best approach is to look at what might make mathematical knowledge synthetic rather than analytic from a post-Fregean point of view.

So I'll begin by turning to Frege's account of analyticity, which is usefully summarized by the Stanford Encyclopedia of Philosophy (SEP). In short, Frege tries to clarify the notion of "containment" that Kant uses to define analyticity. According to Kant, an analytic statement is one that states a fact already contained in the definitions of the terms it uses. So the statement "all bachelors are unmarried" is analytic, but the statement "all bachelors are sad" is synthetic. Frege attempted to refine this definition by linking it to the idea of formal or logical equivalence. If, by a process of purely formal substitution, one can derive a statement from a set of given prior terms, then that statement is analytic.

Now, Frege's hope was that he could show that all arithmetical knowledge was analytic. But there's a convincing argument that he failed. This argument has to do with the problem of the actual existence of mathematical entities. Frege's system explicitly commits itself to the existence of mathematical entities, but the justification for that commitment must be synthetic!

Why should we believe that? Because for any given consistent, effectively axiomatized formalization of arithmetic, there exist Diophantine equations that do not have solutions, but that cannot be proven unsolvable within that formalization. Since Diophantine equations are really quite elementary components of mathematics, we would like a commitment to the existence of mathematical entities to include a commitment to the existence of Diophantine equations. And if we are committed to the existence of those equations, then we would like there to be a fact of the matter whether or not any given Diophantine equation is solvable. But if we depend only on analytic knowledge of mathematics -- if we rely only on formalization -- then we have to accept that in some cases, there is not a fact of the matter whether a particular Diophantine equation is solvable. The conclusion that there is a fact of the matter is an inescapably synthetic judgment -- it posits the existence of Something outside of the formal system of definitions and substitutions that describes it. But because that Something is strictly mathematical in nature, it seems unreasonable to describe our knowledge of it as a posteriori -- unless you reject the idea of a priori knowledge altogether.
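A rough sketch may help show where the asymmetry comes from. Checking a proposed solution to a Diophantine equation is purely mechanical, so a brute-force search can confirm solvability whenever a solution exists within reach. A search that comes up empty, though, shows nothing about unsolvability in general. The function name `find_solution` and the search bound are hypothetical choices of mine, made only for illustration.

```python
from itertools import product


def find_solution(polynomial, num_vars, bound):
    """Search integer tuples with entries in [-bound, bound] for a root.

    Returns the first tuple on which `polynomial` evaluates to zero,
    or None if no such tuple lies within the bound. A None result is
    NOT a proof that the equation has no integer solutions.
    """
    for candidate in product(range(-bound, bound + 1), repeat=num_vars):
        if polynomial(*candidate) == 0:
            return candidate
    return None


# x^2 + y^2 - 25 = 0 has integer solutions, so the search finds one.
circle = find_solution(lambda x, y: x * x + y * y - 25, 2, 5)

# x^2 + 1 = 0 has no integer solutions, but the search alone only
# tells us that none exist with |x| <= 100.
no_root = find_solution(lambda x: x * x + 1, 1, 100)
```

In the second case we know the equation is unsolvable only because we can prove it by other means; the troublesome equations are exactly those where no such proof is available inside the chosen formalization.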

If you don't want to confront this problem, then you don't have to commit yourself to the existence of mathematical entities, but you then give up some kinds of certainty. If you don't want to make that sacrifice, then you have good reason to accept the claim that at least some mathematical knowledge is synthetic a priori knowledge.

So to sum up, it seems we need to say yes to at least three questions to make a convincing claim that some knowledge of X is synthetic a priori knowledge.

- Is there a truth about X that the formal definition of X doesn't already "contain"?

- Do we feel a strong motivation to accept that truth rather than remaining agnostic?

- Is that truth indeed a priori?

Applying these three questions to a hypothetical Chomskyan universal grammar, I think the answer is probably no in all three cases. Now this is where my argument breaks down a bit, because of course there is no established universal grammar yet. It may turn out that linguists discover the actual universal grammar, and find that the answer to all three questions is yes. But I see no particular reason to accept that conclusion yet!

Furthermore, there has been at least some speculation that universal grammar is itself the very paradigm of analyticity. In this account, it is precisely the structure of the universal grammar that gives us our understanding of analytic truth. In that case, it would seem strange that our knowledge of universal grammar is itself synthetic. On the other hand, there doesn't seem to be a strong reason to assume that it is not. Perhaps the best route is to remain agnostic on the matter. But if I had to place a bet, I'd bet that our knowledge of universal grammar, such as it is, is analytic.