I was mirroring a site using HTTrack. It ran for some time and then stopped, with the log containing the following:

<snip>

Too many URLs, giving up..(>100000)

To avoid that: use #L option for more links (example: -#L1000000)

14:48:58 Info: Top index rebuilt (done)

Does this mean it didn't mirror all the pages?

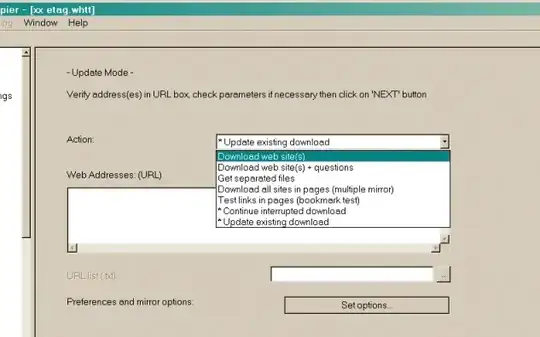

How do I continue the mirroring without spending unnecessary time copying the already-mirrored files?