Context

I'm compressing ~1.3 GB folders each filled with 1440 JSON files and find that there's a 15-fold difference between using the tar command and Python's built-in tarfile library on macOS or Raspbian 10 (Buster)

Minimal working example

This script compares both methods:

#!/usr/bin/env python3

from pathlib import Path

from subprocess import call

import tarfile

fullpath = Path("/Users/user/Desktop/temp/tar/2021-03-11")

zsh_out = Path(fullpath.parent, "zsh-archive.tar.xz")

py_out = Path(fullpath.parent, "py-archive.tar.xz")

# tar using terminal

# tar cJf zsh-archive.tar.xz folderpath

call(["tar", "cJf", zsh_out, fullpath])

# tar using tarfile library

with tarfile.open(py_out, "w:xz") as tar:

tar.add(fullpath, arcname=fullpath.stem)

# Print filesizes

print(f"zsh tar filesize: {round(Path(zsh_out).stat().st_size/(1024*1024), 2)} MB")

print(f"py tar filesize: {round(Path(py_out).stat().st_size/(1024*1024), 2)} MB")

The output is:

zsh tar filesize: 23.7 MB

py tar filesize: 1.49 MB

The versions I use are as follows:

taron macOS:bsdtar 3.3.2 - libarchive 3.3.2 zlib/1.2.11 liblzma/5.0.5 bz2lib/1.0.6taron Raspbian 10:xz (XZ Utils) 5.2.4 liblzma 5.2.4tarfilePython library:0.9.0

Things I've tried

After compression, I've extracted both archives and compared the resulting folder with:

diff -r py-archive-expanded zsh-archive-expanded

There was no difference.

If I compare the two tar archives directly, they seem different:

➜ diff zsh-archive.tar.xz py-archive.tar.xz

Binary files zsh-archive.tar.xz and py-archive.tar.xz differ

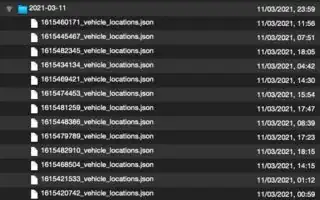

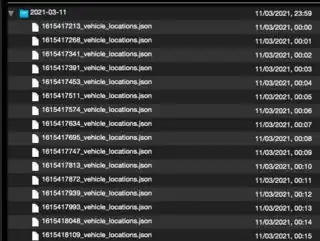

If I inspect the archives with Quicklook (and the Betterzip plugin) I see that the files in the archive are ordered in a different way:

Left is zsh-archive.tar.xz, right is py-archive.tar.xz:

The zsh archive uses an unknown order, and the Python archive orders the file by modification date. I am not sure if that matters.

Question

What is going on? Am I losing something by using the Python library to compress my data? Is the 15-fold difference in size an indicator of some issue? Or can I safely go ahead and use the efficient Python implementation?