A user on Reddit was told by the artificial intelligence ChatGPT that solipsism is true. Why did it say that?

Is there any evidence of solipsism that ChatGPT knows about?

Should ChatGPT be trusted or is it wrong?

Unequivocally, given the way it currently operates, ChatGPT should not be trusted at the moment. It is about as likely to produce misinformation as information. In fact, it currently has no sense of what is true and what is false, something I have experienced personally, and which is surfacing more and more.

In my personal experience, I've seen ChatGPT produce:

and more ...

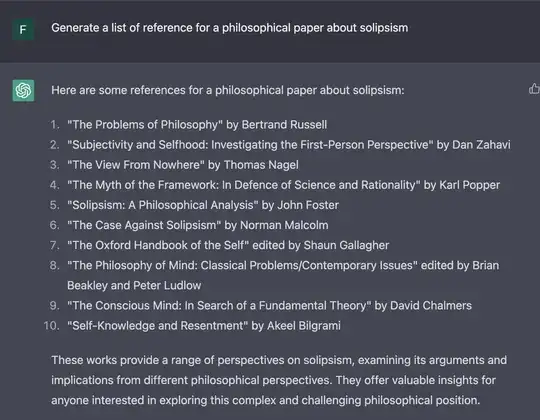

Here is a list of references about "solipsism" generated by ChatGPT just now:

I was unable to find some of the books mentioned in that list on Amazon.

In the end, all ChatGPT does is assemble a collage of what it has seen in its training data, with no verification of whether the result is accurate, coherent, consistent, logical, meaningful or trustworthy. Check the article I point to; it's illuminating: ChatGPT will generate any scientific paper you want, with an extensive list of ... entirely fake references. That should give pause to anybody who wants to use ChatGPT as an authoritative source.

It's possible that these problems will all be overcome in the future, but right now they are glaring issues that can't be avoided.