I have a chess GUI which I've recently been updating to do preemptive searches (that is, depth searches for all possible opponent moves) that I'd like to speed up, since the bot searches go to at least depth 16 for every move, and each move runs a new Stockfish 11 command line (with minimum settings) in a separate thread.

Now, I have some solutions for the threading issue, but one would involve knowing more chess math than I know now.

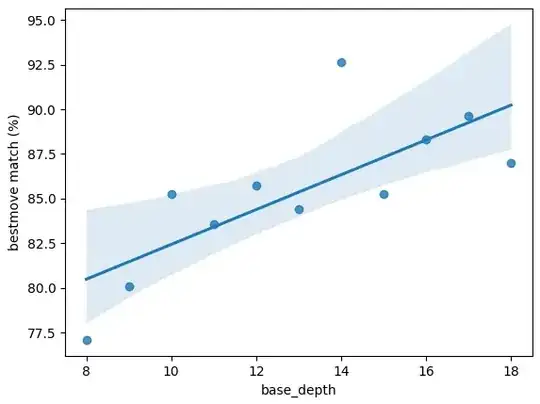

I noticed that, after a certain search depth, the PV's first move, and then first few moves, really don't change that often, and I'd like to know if there's a formula out there, similar to one that I used to calculate winning odds.

I considered the following:

- Do a depth search of n on the position after hypothetical opponent move m.

- Retrieve the pv for depth n.

- Append it to a corpus.

- Do a depth search of n+1 on the same position.

- Retrieve the pv for depth n+1.

- If a text prediction algorithm using the corpus will produce n+1[pv][0:x], such that x is sufficiently large (needs more math) to be reliable, cease the depth searches and recommend n+1[pv][0].

I'm thinking that there might be a simpler way of reaching a similar outcome, since pv[0] would have to arise every time in the text prediction.

What other Stockfish info might I want to consider in generating a statistical confidence that the recommendation need not search any deeper?